Troubling New Data on AI Risks

Today I want to go back to artificial intelligence and some of the policy management and corporate culture challenges that the technology keeps posing. KPMG recently released a wide-ranging survey of how people view and use AI, with statistics that could cast a long shadow over your own AI compliance efforts. Let’s take a look.

The survey, published earlier this month, polled 48,000 people in 47 countries, a broad cross-section of the global public. It asked people how often they use AI in the workplace, how much they trust AI to give reliable answers, whether AI actually helps to reduce their workload, and what kind of AI regulation they want to see.

Among the more interesting findings:

- A majority of respondents (54 percent) don’t fully trust AI, but a split emerged between respondents in advanced economies (only 39 percent trust AI) versus those in emerging economies (65 percent). Think about what that means for a global business operating in both types of countries.

- Nearly half of all respondents admit to using AI at work in ways that contravene their employer’s policies, such as by uploading confidential corporate information into public AI tools. Sixty percent say they have seen or heard other employees using AI tools in inappropriate ways.

- Two-thirds of respondents say they rely on AI output without evaluating the information it provides. Think about what that means for your compliance risks, operational decisions, and litigation exposure.

- Seventy percent of respondents say regulation of AI is necessary, but only 40 percent believe the existing laws or regulations in their countries are strong enough to address AI-related risks right now. Think about what that means for your future risk assessment and policy management challenges.

None of these findings should be surprising, really; thoughtful compliance and audit professionals have known for several years now that oversight of artificial intelligence isn’t where it should be. The KPMG survey simply quantifies those gut feelings and brings them into sharp relief.

The question for compliance officers, then, is how to take these findings and use them to guide the development of your AI policies and controls.

From AI Usage to AI Risks

One striking theme in the KPMG report is the mushrooming use of AI in the workplace, especially since ChatGPT debuted in late 2022. Since that Big Bang moment, the share of employees who say their organizations use AI has more than doubled, from 34 percent to 71 percent.

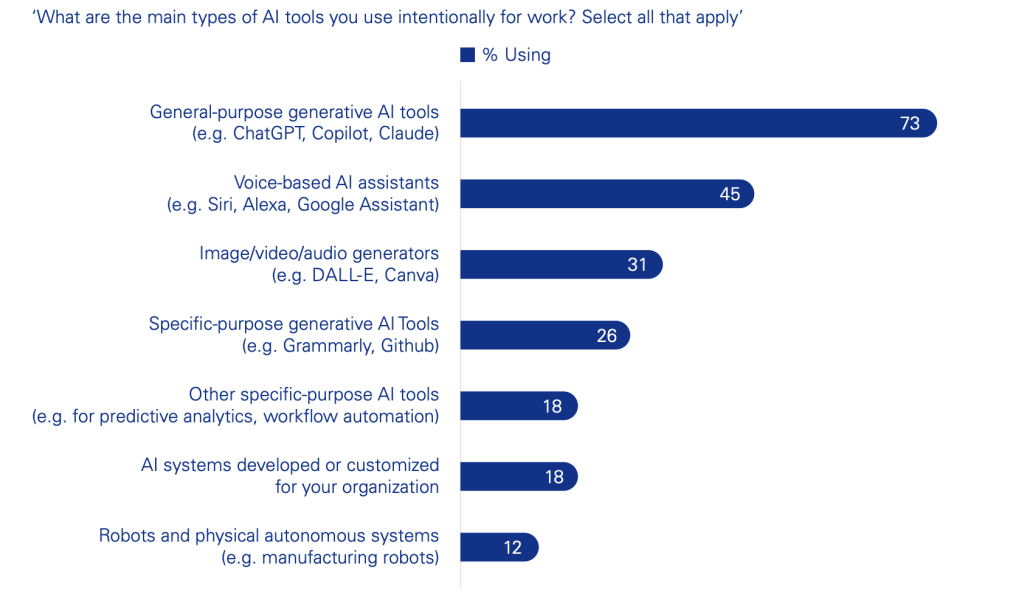

OK, not a surprise — but exactly what types of AI tools are employees using? By far and away, employees say they are using general-purpose generative AI tools such as ChatGPT or Dall-E; or AI-driven voice assistants such as Siri and Alexa. More precise “specific-purpose” tools trailed well behind. See Figure 1, below.

Source: KPMG

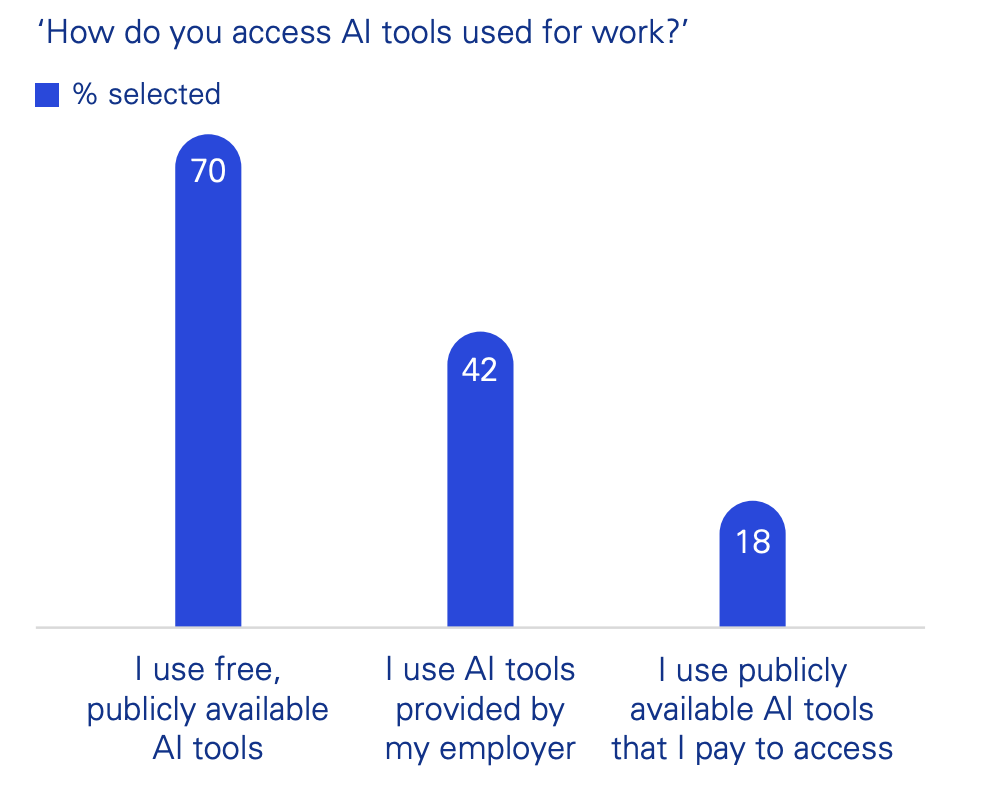

KPMG then asked people how they accessed those AI tools. See Figure 2, below.

Source: KPMG

Put those two findings together, and a complicated picture emerges. On one hand, a company might see AI as a technology for improving specific business processes. Those are the statistics toward the bottom of Figure 1, where employees report using AI tools for workflow automation or predictive analytics.

Employees, however, seem to be using AI in quite a different manner. They see “AI” first and foremost as a set of generative AI tools that they can use to help them perform their routine tasks more efficiently — more like the latest, souped-up addition to spreadsheets, word processors, and other desktop tools.

So what does that mean for the AI compliance program you need to build? If employees are using AI in a more informal way, what are the implications for your risk assessments, policies, controls, audits, and more?

For example, take the EU General Data Protection Regulation and its Article 22, which restricts decisions based solely on automated processing that produce legal or similarly significant effects on individuals, unless an exception such as the person’s explicit consent applies. If your company has built AI into its marketing technologies, you’re more likely to be in compliance with Article 22; your marketing software can have controls built into the code to confirm that user consent was obtained.

But if employees’ tendency is to take customer data, dump it into ChatGPT or some similar tool, and then ask, “What price should we be quoting our customers?” that would require a very different set of policies and controls to prevent Article 22 violations.

Simply put, employees’ usage of AI will dictate the company’s risks from AI. Of course, that’s self-evident, but now we have more insight into how employees really are using AI, and they’re drifting into usage patterns that could be exceedingly difficult to control.

Controls That Actually Work

Here’s an example of what I mean. One easy response from companies alarmed at the potential risks of AI is to declare an outright ban on using AI at work. No use of AI means no risk of employees dumping confidential data into an inappropriate tool, right?

Wrong. Forty-nine percent of all KPMG survey respondents said they have uploaded confidential company information into publicly available AI tools; but among employees whose companies imposed an outright ban, the percentage of errant employees was actually higher: 67 percent.

In other words, bans against AI don’t just fail; they might even drive more employees to use AI tools in unwise ways, because those employees see the ban as an impediment to doing their “real” jobs.

So bans are impractical. Policies alone might not fare much better, because a written policy by itself doesn’t guarantee compliance. To make a real dent in this risk, you’d need more comprehensive guidance that helps employees understand why uploading confidential information is unwise, ideally coupled with dedicated training.

Then again, a company could reduce the risk of employees using publicly available AI by making it easier for them to use purpose-specific AI. That is, build your business processes and AI-driven technology to make employees’ work easier, and they’ll be more likely to follow those business processes as you intend, rather than take a shortcut by dumping data into ChatGPT or some other fly-by-night AI service they find.

To go that route, however, compliance officers have to work with IT teams and First Line operating unit leaders to (a) understand the business workflow the First Line wants; (b) understand what the IT team is willing to build and support to manage that process; and (c) weave compliance concerns into the solution those two groups develop.

None of that is new, really. Anti-corruption compliance officers have grappled with this dynamic for years, trying to build practical anti-bribery and due diligence policies that reflect how the business really works. So have data privacy officers, AML compliance officers, and everyone else in the compliance family tree.

The question now is how to find a path forward for AI compliance, and find it fast — because as this KPMG survey suggests, employees are already finding a path for AI usage on their own.