Governing Third-Party AI Risks

Earlier this week I attended a GRC conference in New York, which meant plenty of talk about challenges in cybersecurity, data management, internal control, and (of course) artificial intelligence. One interesting session explored how to manage the risks of third-party AI models within your enterprise. As usual, I took lots of notes, and I pass them along now.

First let’s explain what this particular AI risk actually is. It’s the risk that some pocket of your enterprise — say, a business unit looking to streamline workflows or to offer new services to customers — incorporates an AI system provided by an outside vendor into your IT systems, apps, and business processes.

So, how did that vendor develop its AI? What controls does that vendor use to assure that its AI system, which is now crunching through your data, meets all your standards and regulatory obligations? It’s quite possible that your employees using the AI system have no clue. More to the point, compliance and GRC teams need to know those answers for all the AI-infused systems running within your enterprise, which means you need a disciplined process to identify and govern these risks. That is why this issue gets on the agenda at conferences like this one (the annual GRC conference hosted by ISACA and the Institute of Internal Auditors).

The two speakers in this conference session (Mary Carmichael of Momentum Technology and Sanaz Sandoughi of the World Bank) began with a logical first step: to govern all the third-party AI systems running within your enterprise, begin by taking an inventory of how many AI systems are actually running within your enterprise.

‘Where AI Risk Is’ Ain’t Easy

That inventory step alone is easier said than done. It requires support from your IT department, which should have the ability to monitor your network and see how many applications are running on it (but even that basic capability isn’t always a given).

I’d also go further to emphasize an important point. The task here isn’t just about taking an inventory of AI systems running within your enterprise; it’s knowing when artificial intelligence is added to applications already running within your enterprise — because every SaaS vendor and their uncle now seems to be saying, “We just added AI into our latest upgrade!” Well, you need to be aware of those instances of AI too.

So yes, GRC teams need to know where third-party AI systems are living within your enterprise, but that’s more than just taking an inventory of AI systems. It requires ongoing monitoring of the SaaS vendors you use, so you’re aware of when they add AI into their applications. Whether you do that through contract terms requiring disclosure, controls to evaluate all SaaS “upgrades” before allowing them into the live environment, or some other approach, that’s the task.
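To make that monitoring task concrete, here is a minimal sketch in Python of what an inventory check might look like. Everything in it is an assumption for illustration: the `SaasApp` record, its field names, the vendor names, and the two review rules are hypothetical, not something the speakers presented. The idea is simply to flag any application whose live release hasn't been evaluated, or whose vendor has disclosed AI that nobody has assessed yet.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class SaasApp:
    """One row in a hypothetical SaaS inventory (all fields are illustrative)."""
    name: str
    owner: str                 # business unit that uses the app
    reviewed_version: str      # last release that GRC evaluated
    live_version: str          # release currently in production
    vendor_discloses_ai: bool  # vendor has told us the product includes AI
    ai_assessed: bool          # GRC has completed an AI risk review


def needs_review(app: SaasApp) -> list[str]:
    """Return the reasons (if any) this app should go back to GRC."""
    reasons = []
    if app.live_version != app.reviewed_version:
        reasons.append("upgrade not yet evaluated")
    if app.vendor_discloses_ai and not app.ai_assessed:
        reasons.append("AI disclosed but not assessed")
    return reasons


# Made-up example data.
inventory = [
    SaasApp("HelpdeskCo", "Customer Support", "4.2", "4.3", True, False),
    SaasApp("PayrollCo", "HR", "2.0", "2.0", False, False),
]

# Keep only the apps that have at least one open reason.
review_queue = {
    app.name: needs_review(app) for app in inventory if needs_review(app)
}
```

A check like this only works if something feeds it: contract terms that force vendors to disclose AI features, or a gate that records each upgrade before it reaches the live environment. The code is the easy part.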

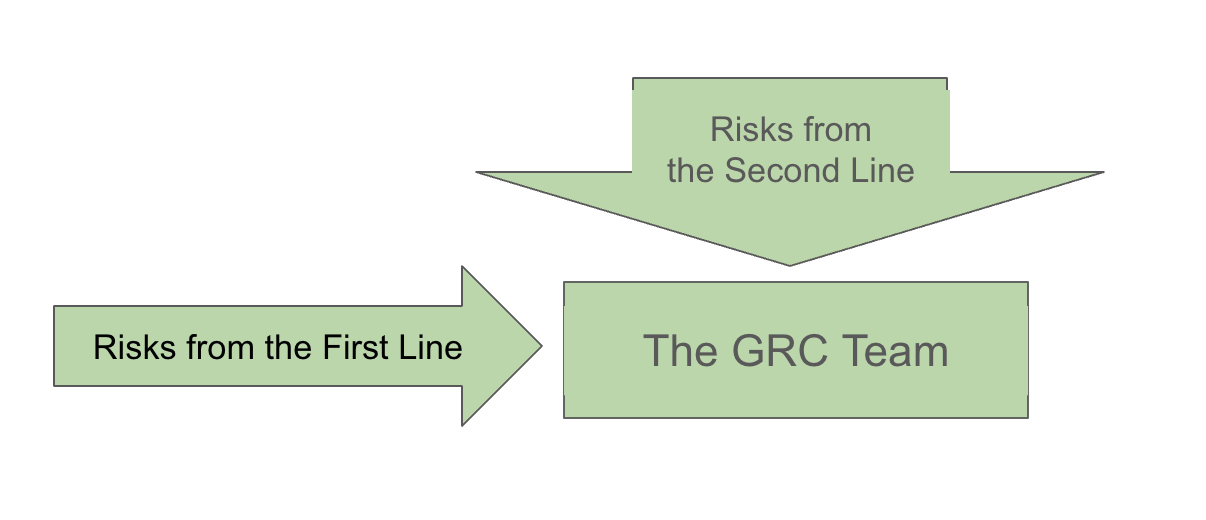

That brings us to a second important point: GRC teams need to appreciate that AI risks are creeping into your enterprise from two different directions.

That is, senior management and the IT team might decide to implement AI across the whole enterprise; but that decision comes from the Second Line of Defense, and is already surrounded by people aware of good risk assessment and compliance practices.

At the same time, you have teams in the First Line of Defense ready to hook up with any promising AI vendor that comes along. Those AI vendors might offer tools and apps that work wonderfully to help your First Line business processes — but you, the GRC leader, have no idea of the quality of those vendors’ AI practices and controls.

As usual, GRC teams are getting hammered from multiple directions.

This just underlines (yet again) the governance challenges that GRC, audit, and compliance teams need to navigate here. You need processes in place to assure that even while those First Line teams are chomping at the bit to embrace AI, you still hold the reins and can steer them in the right direction.

Other AI Vendor Risk Challenges

OK, so now we’re primed to track and inventory all these third-party AI systems converging on your enterprise from multiple directions. What do you actually assess about them? What questions should you ask? The session speakers, Carmichael and Sandoughi, had some suggestions on those issues, too.

First, ask whether that nifty new AI tool is necessary at all; maybe it isn’t. Approach the internal team that wants to use the AI tool and ask them: what’s the purpose of this tool? What business objective would you achieve by using it, and what data will that AI system need to process to do so?

Don’t discount the possibility that you might not need an AI system, or that the ROI isn’t worth it. Consider the “total cost of AI” that would be involved, such as the costs of new risk management controls to govern the AI system and its behavior. Consider whether your internal teams know how to use the tool, and whether (as so often happens with SaaS vendors) the vendor might change up its technology, controls, or processes sometime in the future in a way that might make your oversight of the tool more expensive.

(As an aside, here’s a timely MIT research report suggesting that so far, the vast majority of AI investments aren’t producing any big gains for corporations. But the risks are piling up into the sky.)

Second, build the right, AI-centric questions into your risk assessment process. For example, most large companies will end up facing regulations such as the EU AI Act or various U.S. state laws that require AI systems to be tested for bias. You might also need to meet regulatory requirements for explainability (that is, someone can explain how the AI reached its decisions), auditability, and cybersecurity.

There’s more. You’d also need to understand the AI vendor’s approach to data validity, and its ability to segregate protected data from the LLM that serves as the AI’s brain. You’d need to confirm strong access controls, and controls to account for “model drift” over time.

Some of these issues — say, around security, privacy, and access control — are not really new. They’re cybersecurity risks more than they are AI risks, so ideally you should already be posing them to your third parties as part of your pre-existing third-party due diligence.

Put another way: if you’re inventing a wholly new “third-party AI risk assessment process,” then you’re either re-inventing the wheel or you’re late to the game. Third-party AI risks are just the newest type of third-party risk, so this should just be a matter of expanding the third-party risk management program that you already have. (Yes, I’m well aware that “should” is doing an awful lot of work in that sentence.)
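As a rough illustration of "expanding the program you already have," here is a minimal Python sketch. The topic lists are drawn from the issues mentioned above; the function name, the data shape, and the idea of tracking each topic as a simple yes/no answer are my own assumptions, and a real questionnaire would be far more detailed.

```python
from __future__ import annotations

# Topics a pre-existing third-party questionnaire presumably already covers.
EXISTING_TPRM_TOPICS = ["security", "privacy", "access control"]

# AI-centric topics layered on top, only for vendors that use AI.
AI_TOPICS = [
    "bias testing",
    "explainability",
    "auditability",
    "data validity",
    "protected-data segregation",
    "model drift",
]


def open_items(answers: dict[str, bool], uses_ai: bool) -> list[str]:
    """List the topics a vendor has not yet answered satisfactorily.

    `answers` maps a topic to True when the vendor's response was accepted;
    a missing topic counts as unanswered.
    """
    topics = EXISTING_TPRM_TOPICS + (AI_TOPICS if uses_ai else [])
    return [topic for topic in topics if not answers.get(topic, False)]


# Hypothetical vendor that passed the traditional review, plus bias testing.
vendor_answers = {
    "security": True,
    "privacy": True,
    "access control": True,
    "bias testing": True,
}

remaining = open_items(vendor_answers, uses_ai=True)
```

The design choice worth noticing is that the AI questions are an extension of the same list, applied through the same process, rather than a separate assessment with its own workflow.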

One last point. As you try to expand your existing TPRM program to encompass AI risks, presumably you’ll look around for a framework to use. That’s fine. But let’s appreciate that traditional privacy and security frameworks (say, from NIST or ISO) are mostly about access control and data classification.

Those frameworks will help, but AI risks are more about data quality and data usage, which is not the same thing. So ultimately you’ll still need to find a new, more AI-centric framework, such as ISO’s new 42001 standard for AI, and start integrating that into your TPRM program too.