More Notes on the Compliance AI Freakout

Navex released its annual State of Risk & Compliance Report the other week, and I’ve been looking through the findings for insights into what’s on compliance officers’ minds these days. One issue that stood out: how compliance teams are handling artificial intelligence.

We should dwell on this because, as just about every compliance officer already knows, companies are scrambling to figure out how to make AI work within their organizations. Those AI experiments come with a host of compliance and operational risks. The sooner we all figure out what role compliance officers should play in that transformation, the better.

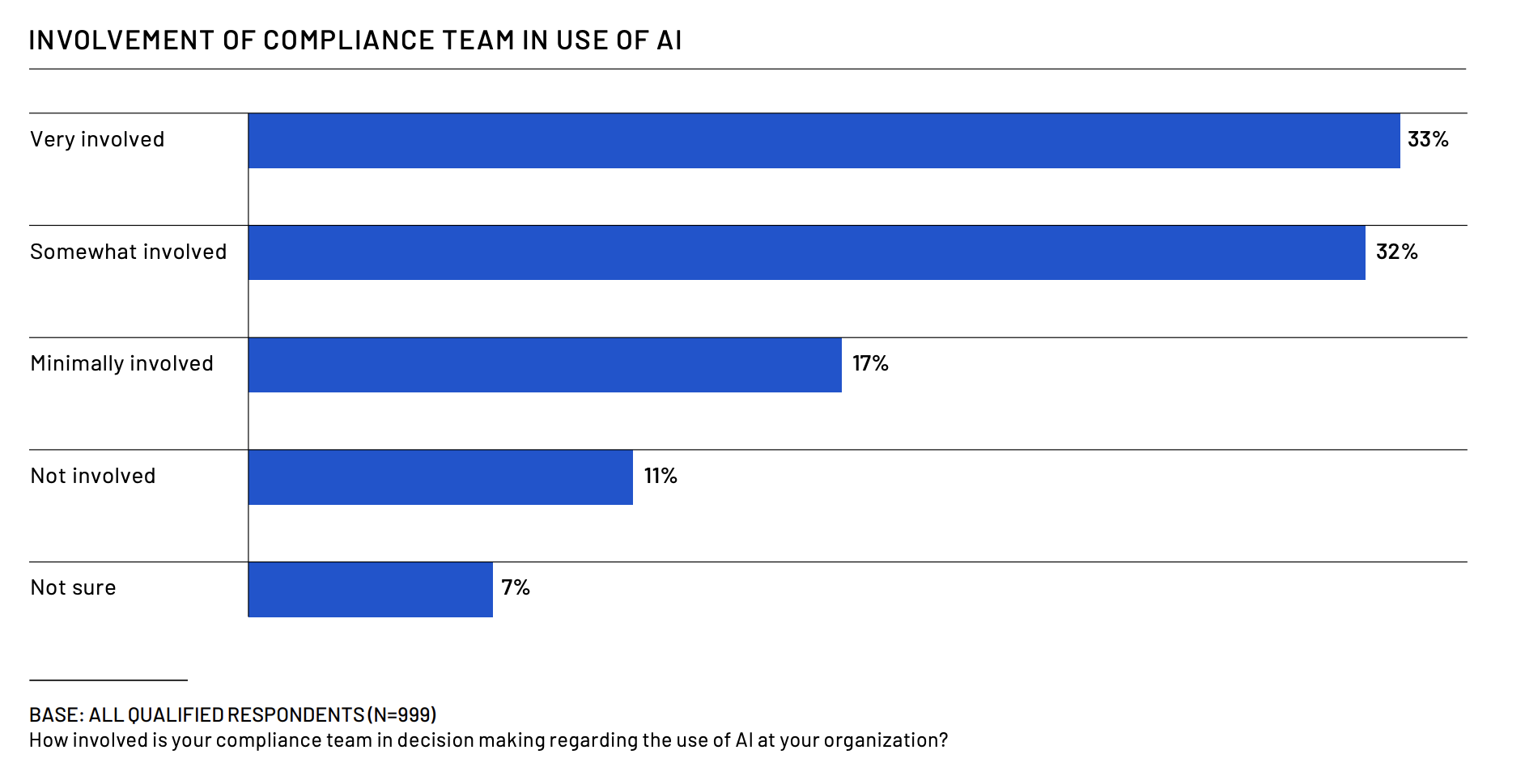

Anyway, back to the Navex report, which polled nearly 1,000 compliance and risk management professionals from around the world and across a wide range of business sectors and sizes. Its key finding: a solid majority of compliance teams are involved in deciding how AI is used at their organizations.

Figure 1, below, tells the tale. Roughly one-third of respondents were “very involved” and another third were “somewhat involved.” Everyone else trailed behind.

Source: Navex

OK, I like the finding that most compliance teams are at least somewhat involved in AI adoption, and a good number are “very involved” — but are you involved in the most useful, productive ways? That’s another important question for compliance officers, and the Navex report wasn’t quite as reassuring to me on that one.

(Disclosure before we go further: Yes, Navex does pay me for various projects, such as writing guest blogs for them from time to time. No, they did not pay me to write this; nor did they see this post in advance.)

Where Compliance Officers Worry About AI

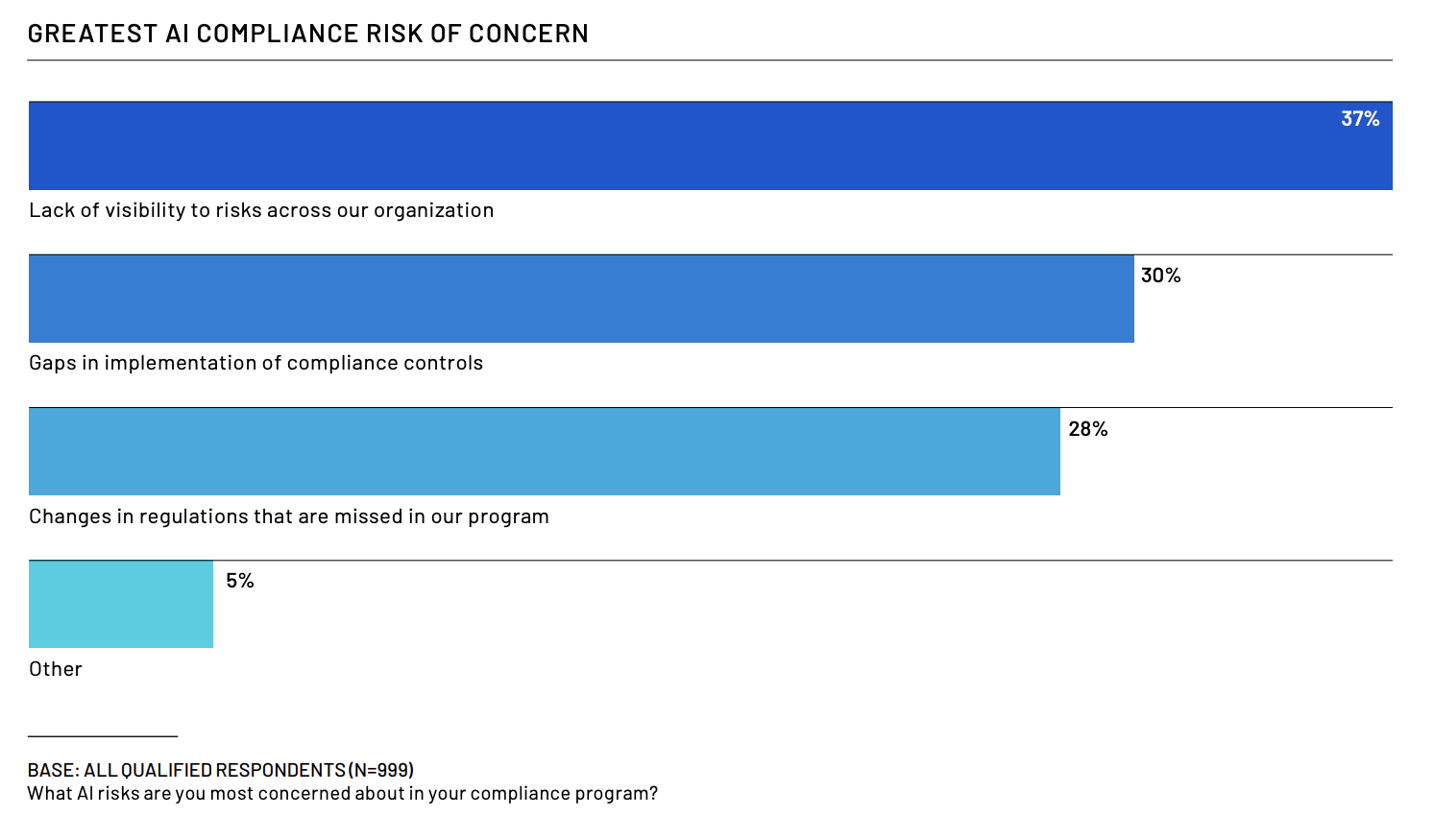

The Navex report also asked compliance officers which AI risks worry them the most. The top answer was “lack of visibility into risks across our organization,” followed by “gaps in implementation of compliance controls.” See Figure 2, below.

Source: Navex

None of those findings strikes me as wildly off-base, but I’d encourage compliance officers to think about what those answers really tell us.

First, when we see “lack of visibility into risks across our organization,” that really means you’re afraid your AI governance effort isn’t up to snuff.

A strong governance program should allow the management team to see where AI is being adopted across the enterprise. Said governance requires a blend of policies to guide business teams as they experiment with AI, plus monitoring tools (probably run by the IT team) to see where AI applications are running in your extended enterprise. It’s about observing what different parts of the enterprise are doing and building mechanisms to steer them all toward desired goals, without micro-managing each team until everyone hates your guts.

So when we see “lack of visibility,” remember: it means you’re nervous that you don’t know what’s truly happening with AI in your enterprise. That means your governance mechanisms aren’t cutting it.

And from that first worry naturally flows the second: concerns about gaps in implementation of compliance controls for AI. If you’re not sure that you know all the AI risks in your enterprise, of course you’d then worry that your controls structure is no longer aligned to the risks.

We could list a few possible solutions to this concern: better controls testing; better control design (which also means more collaboration among internal audit, compliance, and IT to design those controls); better risk assessments; better tools to track controls remediation and documentation.

That’s all great, but it presumes that you’ve solved those governance and visibility challenges we’ve already mentioned. Compliance, cybersecurity, internal audit, and senior management teams must come together and solve those governance challenges first.

Otherwise you’ll always be chasing after AI risks, pelting them with controls after the fact, rather than getting ahead of AI risks and steering your enterprise forward in a strategic, risk-aware manner, which is what the board and the C-suite want to see.

More Specific AI Worries

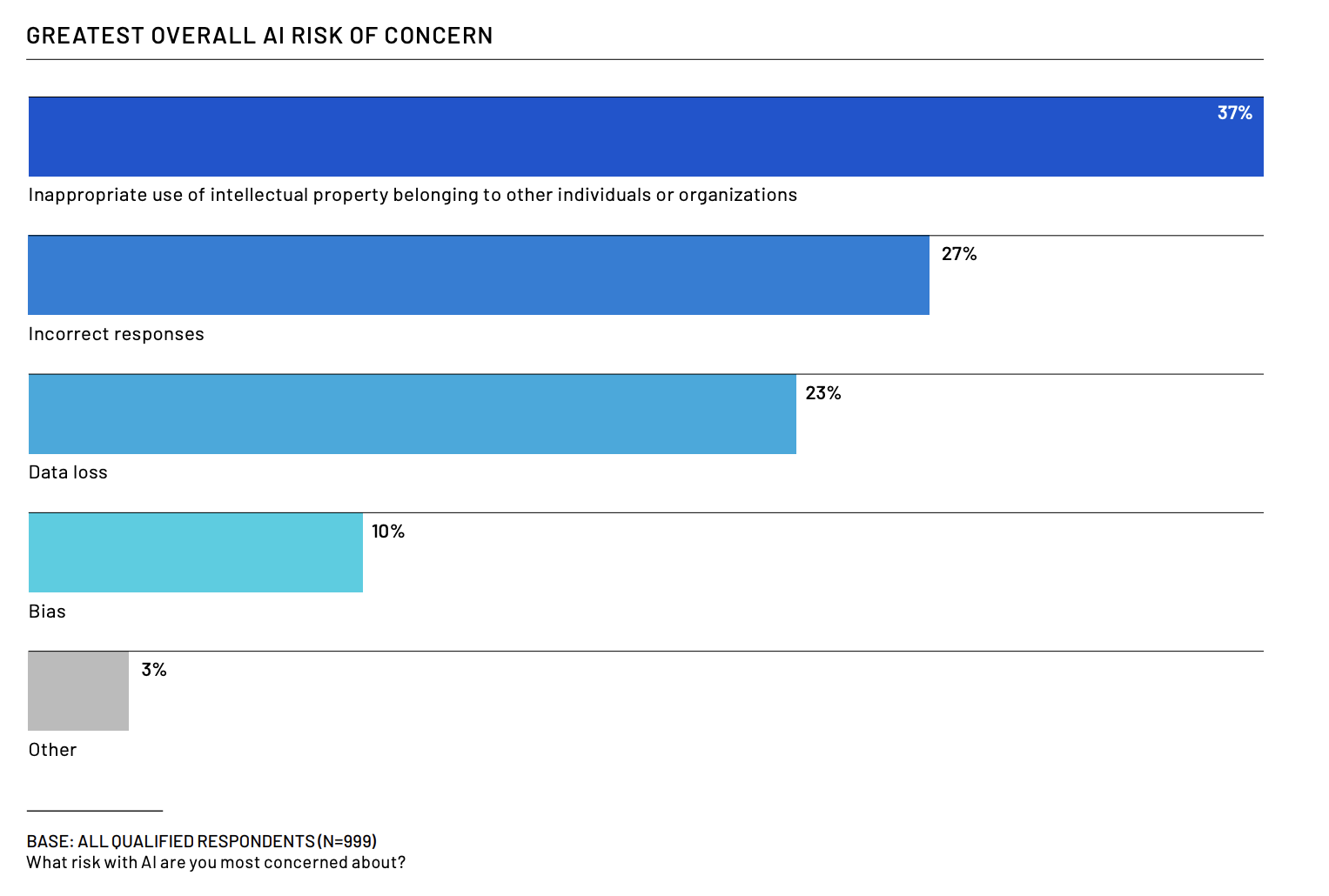

The Navex report then drilled down further into the specific AI risks that worry compliance officers right now. The top concern was inappropriate use of intellectual property or data belonging to others, by an easy margin. See Figure 3, below.

Source: Navex

Now let’s pivot to a recent report from KPMG on exactly how employees are using AI at work. In that report (based on a survey of 48,000 people in 47 countries), nearly half of all respondents admitted to using AI at work in ways that contravened their employer’s policies. Sixty percent said they have seen or heard other employees using AI tools in inappropriate ways.

Another important finding from the KPMG report: 70 percent of employees routinely use free, publicly available AI tools such as ChatGPT, Gemini, or Claude to help them do their jobs.

Those two findings from KPMG tell me that most employees view AI as something akin to a desktop software tool that they can use to save time on their “real” jobs.

Well, if that’s the case — and I haven’t seen any data or arguments so far to convince me that it’s not — then of course compliance officers should be concerned that employees might be inappropriately using intellectual property or data that belongs to someone else. That’s because employees are taking data and dumping it into ChatGPT all the time, so they can get their work done and punch out early.

Ditto for those second- and third-place worries in Figure 3, too. You’re worried about AI giving incorrect responses? Well, you should be, because two-thirds of employees in the KPMG survey said they rely on AI output without evaluating the answers it provides. You’re worried about data loss? Exactamundo, since these freely available tools will gobble up pretty much every scrap of data fed into them.

Two Parting Points

What can compliance officers do with these insights? How do we move forward while in these precarious positions? Two thoughts come to mind.

First, at the tactical level, compliance and IT security teams will need to pay much more attention to data management issues. Your fears about employees taking data they shouldn’t and processing it through AI systems in inappropriate ways are well-founded. Your enterprise will need to be razor sharp on data classification, access control, and related issues.

Alas, compliance officers are not typically well-versed in IT and data management controls. Your expertise is knowing how pieces of information could be used permissibly under various laws and regulations; GRC, IT audit, or cybersecurity teams know the nitty-gritty control issues. So better collaboration between regulatory compliance and IT or data management teams will become more important.

Second, at the strategic level — we should all appreciate that these “AI transformations” are really business process transformations. People are using AI to transform how they work and what they do. Sometimes that happens in a disciplined fashion, when the IT team rolls out an enterprise-wide tool. Lots of times it will unfold in a haphazard fashion, when employees quietly use ChatGPT without telling anyone or a third-party vendor builds AI features into a tool you already use.

So for the next few years at least, when we talk about AI governance, that really means understanding how to re-engineer your business processes to harness AI in a sensible way — a way that still meets your compliance obligations, keeps other operational risks low, fits within your ethical boundaries, and either boosts productivity or cuts costs.

That’s the goal of good AI governance. If the management team doesn’t act now to achieve it, they’ll find that the rest of the enterprise will rush ahead with bad AI governance all on its own.