What If Your AI ROI Fails?

We interrupt everyone’s ceaseless march into an AI-enhanced future to note a recent study which found that artificial intelligence actually slowed down experienced software developers rather than speeding them up. For corporations itching to roll out AI tools at scale, these findings raise several questions worth pondering.

The study itself comes from Model Evaluation & Threat Research (METR), a nonprofit group in Berkeley, Calif., that works with AI businesses to study advanced AI systems and how well that cutting-edge software does or doesn’t perform. Long story short, METR found that developers using AI tools expected to cut their time spent on various tasks by 24 percent, but in reality, using AI increased their time spent on those tasks by 19 percent. Quite simply, using AI slowed the developers down.

To be fair, this study involved only 16 software developers, so we can’t call it a large-scale research effort, but it was thoughtfully designed and academically rigorous. METR had the 16 developers complete 246 coding tasks on reputable open-source software repositories that the developers had used before. Each task was randomly assigned either to a condition where the developer could use AI tools or to one where the developer couldn’t. Then METR recorded the developers’ actual work to see how much time they spent on various coding tasks.

The eye-popping result…

- Developers predicted that AI would reduce their “task completion times” by 24 percent before they did the work;

- Those same developers then estimated that AI had helped them cut task completion time by 20 percent after they did the work; but

- Close analysis of the work they did found that AI increased their task completion times by 19 percent.
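To make that perception gap concrete, here is the arithmetic applied to a single task. This is a minimal sketch: only the three percentages come from the study; the 60-minute baseline is a hypothetical value chosen purely for illustration, and METR’s own statistical method is more involved than simply multiplying a baseline by a percentage.

```python
# Hypothetical illustration: apply the three METR percentages to a task
# that would take 60 minutes without AI. The 60-minute baseline is an
# assumption for illustration, not a figure from the study.
BASELINE_MINUTES = 60.0

PREDICTED_CHANGE = -0.24  # developers' forecast before doing the work
PERCEIVED_CHANGE = -0.20  # developers' own estimate after doing the work
MEASURED_CHANGE = 0.19    # what analysis of the recorded work showed


def minutes_with_ai(baseline: float, pct_change: float) -> float:
    """Return task time after applying a fractional change to the baseline."""
    return baseline * (1 + pct_change)


predicted = minutes_with_ai(BASELINE_MINUTES, PREDICTED_CHANGE)
perceived = minutes_with_ai(BASELINE_MINUTES, PERCEIVED_CHANGE)
measured = minutes_with_ai(BASELINE_MINUTES, MEASURED_CHANGE)

# Difference between what developers believed happened and what happened.
perception_gap = measured - perceived

print(f"Predicted: {predicted:.1f} min")
print(f"Perceived: {perceived:.1f} min")
print(f"Measured:  {measured:.1f} min")
print(f"Perception gap: {perception_gap:.1f} min per task")
```

On this hypothetical baseline, developers would have believed the task took 48.0 minutes when it actually took 71.4, a gap of 23.4 minutes. The takeaway for an ROI exercise is that self-reported time savings may not be a reliable input on their own.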

So why should compliance and audit teams dork out over the results of one small study specifically looking at one AI use-case?

Because it reminds us that at this early juncture, organizations must be quite skeptical of AI implementation. It’s not clear right now that every AI use-case will actually be worthwhile, especially considering all the new risks, hidden costs, and organizational disruptions that AI might bring.

And you, compliance officer worried about new risks or internal audit executive doing a cost-benefit analysis, might end up needing to raise that point.

The Perils of AI ‘Help’

The METR study identified two important issues that people in charge of AI implementation should consider.

First, using AI creates new tasks that will consume a person’s time, even as the AI might streamline other tasks that save the person’s time. We’ll need to identify and measure those new tasks somehow and include them in our ROI calculations.

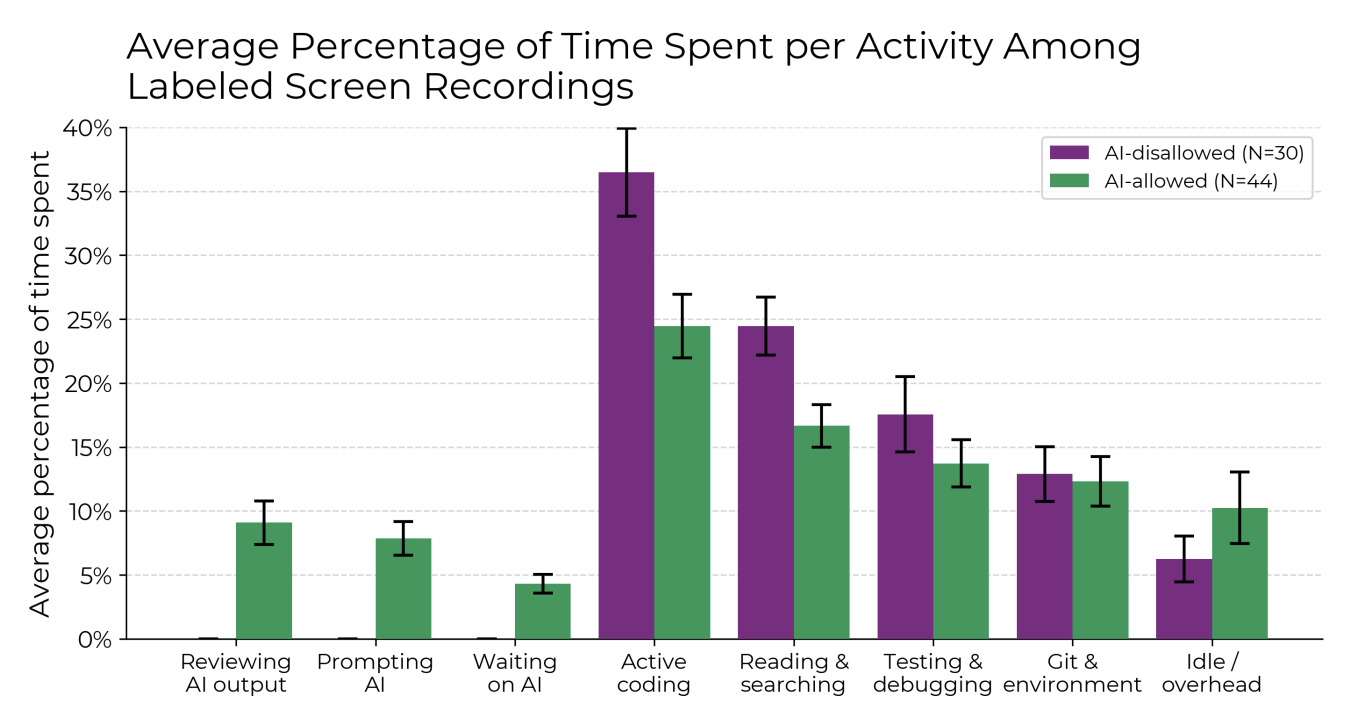

For example, Figure 1, below, shows an assortment of tasks the coders performed. The purple lines were tasks done without AI, the green ones tasks done with AI.

Source: METR

As you can see, AI saved the coders’ time on lots of traditional tasks, such as active coding, searching for information, and testing and debugging. But it also created several new tasks: writing the AI prompt, reviewing the AI output, and just waiting around for the AI to do its thing. Those things all take time, too.

So when you net all those gains and losses together, will the result be a genuine gain in efficiency or reliability? Will you reduce operational, compliance, or security risks in some ways but create new ones in others that might negate the value of AI? That’s going to be a crucial question for CISOs, internal auditors, and compliance officers to answer.
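One way to frame that netting exercise is to list each task category with and without AI, then add up the time saved and the time added. The sketch below assumes that structure; the category names come from the METR discussion above, but every minute value is a made-up placeholder, not METR data, and `TaskCategory` is a hypothetical helper introduced only for this illustration.

```python
# Sketch of netting time gains and losses across task categories.
# All minute values are HYPOTHETICAL placeholders, not METR data;
# substitute your own measurements.
from dataclasses import dataclass


@dataclass
class TaskCategory:
    name: str
    minutes_without_ai: float
    minutes_with_ai: float

    @property
    def delta(self) -> float:
        """Positive means AI added time; negative means AI saved time."""
        return self.minutes_with_ai - self.minutes_without_ai


categories = [
    # Traditional tasks where AI may save time
    TaskCategory("active coding", 30, 20),
    TaskCategory("searching for information", 10, 6),
    TaskCategory("testing and debugging", 15, 12),
    # New tasks that exist only because AI is in the loop
    TaskCategory("writing prompts", 0, 8),
    TaskCategory("reviewing AI output", 0, 10),
    TaskCategory("waiting on AI", 0, 7),
]

saved = -sum(c.delta for c in categories if c.delta < 0)
added = sum(c.delta for c in categories if c.delta > 0)
net = added - saved

baseline = sum(c.minutes_without_ai for c in categories)
print(f"Time saved: {saved:.0f} min; time added: {added:.0f} min")
print(f"Net change: {net:+.0f} min ({net / baseline:+.0%} vs. baseline)")
```

With these invented numbers, AI saves 17 minutes on traditional tasks but adds 25 minutes of new ones, for a net loss of 8 minutes. The same table could in principle carry cost or risk-score columns as well, though that goes beyond what the METR study measured.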

Second, it’s possible that AI does help less experienced people with a task more than it helps experienced people already familiar with the work. The developers in METR’s study had an average of five years’ experience in coding and they all knew the software repositories they were using in the experiment. METR knew this; the whole point of the study was to see how much AI might help experienced employees.

This raises the possibility that your AI adoption plans might help some employees more than others, depending on how well they know the task at hand. It could also open the door to a big redesign of jobs and roles overall, so that all the easy, “everyone already knows this” tasks are assigned to one specific role, which then gets automated away by an AI agent.

Either way, these are all important but nuanced factors that the people in charge of designing smart, durable, risk-aware business processes will need to consider, and you, the compliance, audit, or GRC manager, should be among those people.

These AI Issues Applied at Scale

So what can the rest of us take away from this small study about AI and software developers?

Let’s first consider “vibe-coding,” that is, the idea that a software developer describes the software they want to an AI tool, the tool writes the actual code, and the developer double-checks the result. AI enthusiasts in the software world say this will become an increasingly popular way to develop code.

An analogous concept for compliance and risk management professionals would be “vibe-process development” — where a business process is redesigned to let AI do the work, and managers just check the output. How would we identify and address compliance or risk concerns in that sort of work world?

For example, say the marketing team wants to use AI to generate campaigns for consumers, or, even closer to home, the legal team wants to use AI for contract review. What role will the compliance, audit, or IT security teams play in that process? What say will you have in what the AI will or won’t be allowed to do?

What if you find that the AI tool needs so much prompting at the start, requires such close review of the final product, and demands such high standards for data validity and algorithmic bias analysis that it’s more cost-effective to let the human employees who already know all that material do the work themselves?

I’m also reminded of an excellent recent article by the editor of Corporate Compliance Insights, “AI Made Me Dumb and Sad.” The editor, Jennifer Gaskin, honestly tried to use AI to help her summarize long texts, write articles, and whatnot, but she spent so much time crafting just the right AI prompts, or tweaking output that was fairly good but never quite right, that she stopped using AI. As Gaskin wrote:

Because I stopped doing these tasks, I got worse at doing them, more disconnected from the reasons why the tasks needed doing in the first place and less enthusiastic about my job, all of which made me a much less happy human being.

So what happens if you develop an AI tool that’s useful for some employees, but others don’t want to use it and can perform perfectly fine without it? Does the company force them to use a tool that degrades their engagement and efficiency? Or if you allow employees to choose their own AI adventure, how do compliance, audit, and risk management functions monitor the risks of both groups?

I’m not saying the ROI of artificial intelligence will never be there, but with each passing day it’s becoming clearer that calculating the ROI of your AI investments is far more complicated than we first understood. It will involve all sorts of trade-offs related to cost, security risks, compliance risks, redesigned controls, and employee engagement.

Maybe at the end of all that, the answer will still be yes. Maybe it will be no. But compliance and audit teams will need to be in that conversation if you want to reach the right answer.