Notes From #RISK Conference

This week I had the good fortune to attend the #RISK New York conference, a two-day event where 300-ish audit, risk, and compliance professionals gathered to talk shop about the evolving challenges of GRC and risk management. We had lots to discuss and I took lots of notes, so let me jot down a few impressions here.

First, I was struck by a comment from longtime GRC analyst Michael Rasmussen, who served as emcee for most of the conference. Rasmussen was recalling a conversation he recently had with the chief risk officer of a large European company. The risk officer had taken a call from a GRC vendor, who breathlessly promised that his product would reduce the time the chief risk officer spent on various tasks by 75 percent.

“Hold on,” Rasmussen said. “Making investments in GRC technology isn’t about reducing time. It’s about reducing risk. That’s the whole point of what GRC functions are supposed to do: reduce risk.”

Rasmussen is right, of course. The purpose of a GRC function, with all the attendant personnel and technology, is to identify risk within the business and keep those risks at acceptably low levels, so that the business can keep pursuing its objectives.

OK, but is that how you’re framing your GRC mission to senior management and First Line operating units, so that they’ll support your efforts?

A running theme throughout the #RISK conference was that too often, our answer to the above question is no. Instead, GRC leaders still slip into the bad habit of talking about what they do — and the investment dollars they want — in terms of compliance with various regulations.

That’s unwise. It allows senior management teams to talk themselves into not funding a strong GRC program, because hey, maybe that regulation will be repealed, or maybe the Administration will let us off with a slap on the wrist. So while we like our GRC leader, let’s see if he or she can make do for another few quarters with what we already have.

To succeed in your role, you always need to be talking with First and Second Line operating units to understand what they’re doing, and how a risk gone wrong might cause their business processes to go haywire. Then present that information to senior management in a way that lets them see that a strong GRC function helps the business to be more durable and resilient in the face of threats, and more agile amid swiftly changing business conditions.

Corporate Culture Matters, Example No. 8,716

Another insight came when a speaker was telling a story you may have heard before. A low-level payment clerk at a large company received what seemed to be a video call from the CFO, ordering the clerk to wire $10 million to an overseas account to complete a merger. Except, of course, that video call was a deepfake.

The payment clerk averted disaster by calling the CFO’s office to confirm the order, and the CFO said no, the video call had been faked. The astute clerk was hailed as a hero.

As I said, you’ve probably heard this tale before, because it’s happened more than once. But we never talk about the alternative scenario here: that the clerk calls the CFO, and it turns out that the suspicious request is indeed legitimate.

At that moment, two possible futures hang in the balance. The CFO could say, “Yes, this is legitimate, you pest; quit bothering me.” Or the CFO could say, “Yes, this is legitimate, and thank you for taking the extra step to confirm.”

I dwell on this because it reminds us of how much the success of your anti-fraud program depends on corporate culture. You need a senior management that welcomes inquiries like this, so employees will know to raise concerns more often.

That’s always been true; we’ve talked many times before on these pages about the need for management to embrace a listen-up culture. In our new world of deepfakes and other AI-enhanced frauds, however, a listen-up culture will become even more important, because those frauds will be so convincing. In response, we’ll need a more skeptical and challenging workforce. So CFOs should expect more calls from employees challenging the orders they receive.

So is your management team ready for that? Do they welcome such skepticism? Or are your senior managers a bunch of gruff “don’t bother me with the small stuff” types? Because if it’s the latter, you’re in trouble.

The Scope of an AI Governance Committee

I also had the honor of giving a presentation at #RISK myself (Radical Compliance was a media partner for the event), on AI governance committees and why they’re so urgently needed as companies rush to adopt artificial intelligence across the enterprise.

Today I want to focus on one part of that issue: Just what are these AI governance committees supposed to do, anyway? What are they supposed to worry about?

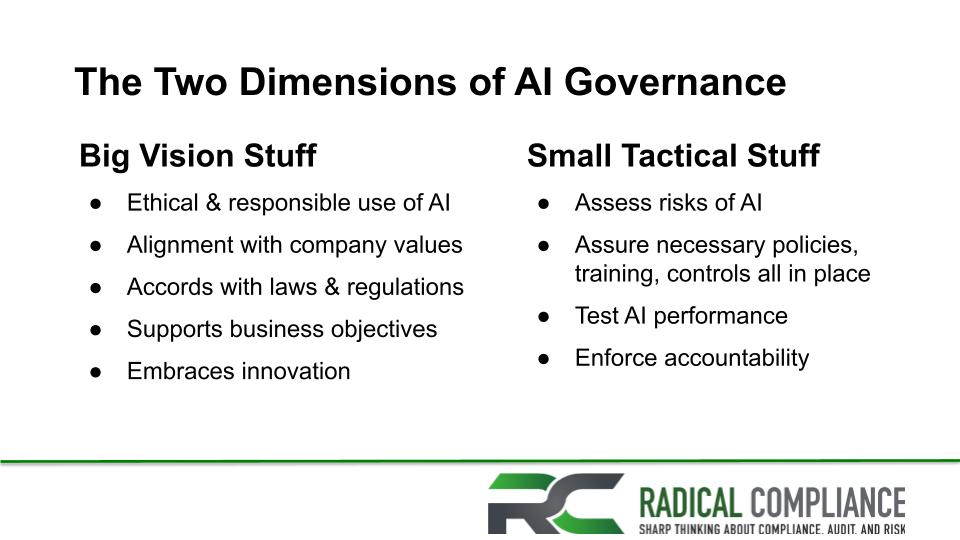

Defining the scope of an AI governance committee can be tricky, because AI risks unfold along two dimensions.

First is what I call the Big Strategic Stuff. This is where you align the company’s use of AI with its ethical values and its business objectives. For example, do you want to use AI to streamline costs and operations, or do you want to use AI to expand into new products and new markets? Either choice is equally valid, but you’ll use different AI systems for each one.

Second is what I call the Small Tactical Stuff. For example, what are the risks of your employees using ChatGPT to create work product? If the marketing team is using it to create new marketing copy, then you might face one set of (relatively low) risks related to copyright infringement. On the other hand, if the engineering team is using it to create code, you might have sky-high risks related to security and product quality. Each use case would have its own risks and controls you’d need to consider.

Your AI governance committee will need to consider both the Big Strategic Stuff and the Small Tactical Stuff at the same time.

Source: #RISK presentation

We could keep going. Your AI governance committee would also need to think about how AI will help the company achieve its business objectives, which entails one set of risks; and how employees will actually use AI in their daily routines, which entails another set. Then go about the business of defining those risks clearly, setting risk tolerances for each one, and implementing controls to keep risk levels in check.
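For readers who like to see that workflow written down, here is a minimal sketch of a risk register built from the two ChatGPT examples above. Everything specific in it is an assumption made for illustration: the 1-to-5 scale, the scores, the tolerance values, and the names `UseCaseRisk` and `over_tolerance` are all hypothetical, not something presented at #RISK.

```python
from dataclasses import dataclass


@dataclass
class UseCaseRisk:
    """One row in a hypothetical AI risk register."""
    team: str
    use_case: str
    risk: str
    level: int      # assessed risk, 1 (low) to 5 (high); illustrative scale
    tolerance: int  # highest level accepted without additional controls

    def needs_controls(self) -> bool:
        # A risk is out of tolerance when its level exceeds the accepted maximum.
        return self.level > self.tolerance


# Illustrative entries only; the scores are made up for this sketch.
register = [
    UseCaseRisk("marketing", "drafting marketing copy",
                "copyright infringement", level=2, tolerance=3),
    UseCaseRisk("engineering", "generating code",
                "security and product quality", level=5, tolerance=2),
]


def over_tolerance(entries):
    """Return the entries whose risk level exceeds the stated tolerance."""
    return [e for e in entries if e.needs_controls()]


for entry in over_tolerance(register):
    print(f"{entry.team}: {entry.risk} exceeds tolerance "
          f"({entry.level} > {entry.tolerance})")
```

The point of the sketch is only that each use case carries its own risk, its own tolerance, and its own decision about controls; a real committee would settle on its own scale and categories.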

None of that is easy, but with the right AI governance committee, at least you can go about the work in a straightforward manner.

Anyway, congratulations to the #RISK conference organizers for a solid and informative event. They’ll be having another one in Los Angeles in October and then in London in November. If your schedule allows, they’re worth attending!