Study Ranks AI Models on Compliance Tasks

We’re back to artificial intelligence today, with fresh research on how well various AI models perform at tasks that compliance teams encounter on a daily basis. The bottom line: yes, just about all AI models are generally good at lots of compliance work, but integrating AI into compliance workstreams is still going to be a complicated undertaking.

The research comes from compliance software vendor EQS, which today released a study that ranked how six large language models (LLMs) performed at more than 120 compliance-related tasks. The result is that Google’s Gemini 2.5 seems to be the best AI brain for compliance work overall, but other AI models occasionally scored higher at specific tasks, and the rapid evolution in LLM performance means that no single AI model is likely to sit atop the rankings for long. That will have big implications for how you figure out which LLM to weave into your compliance program.

Let’s first look at what EQS did.

The company teamed up with Berufsverband der Compliance Manager (BCM), a German association of compliance professionals, to identify 120 separate compliance tasks grouped under 10 major categories: regulatory change analysis, risk assessment, control design, conflicts of interest management, and so forth. BCM then tested the six major LLMs dominating artificial intelligence today at those tasks, to generate performance scores from 1 to 100.

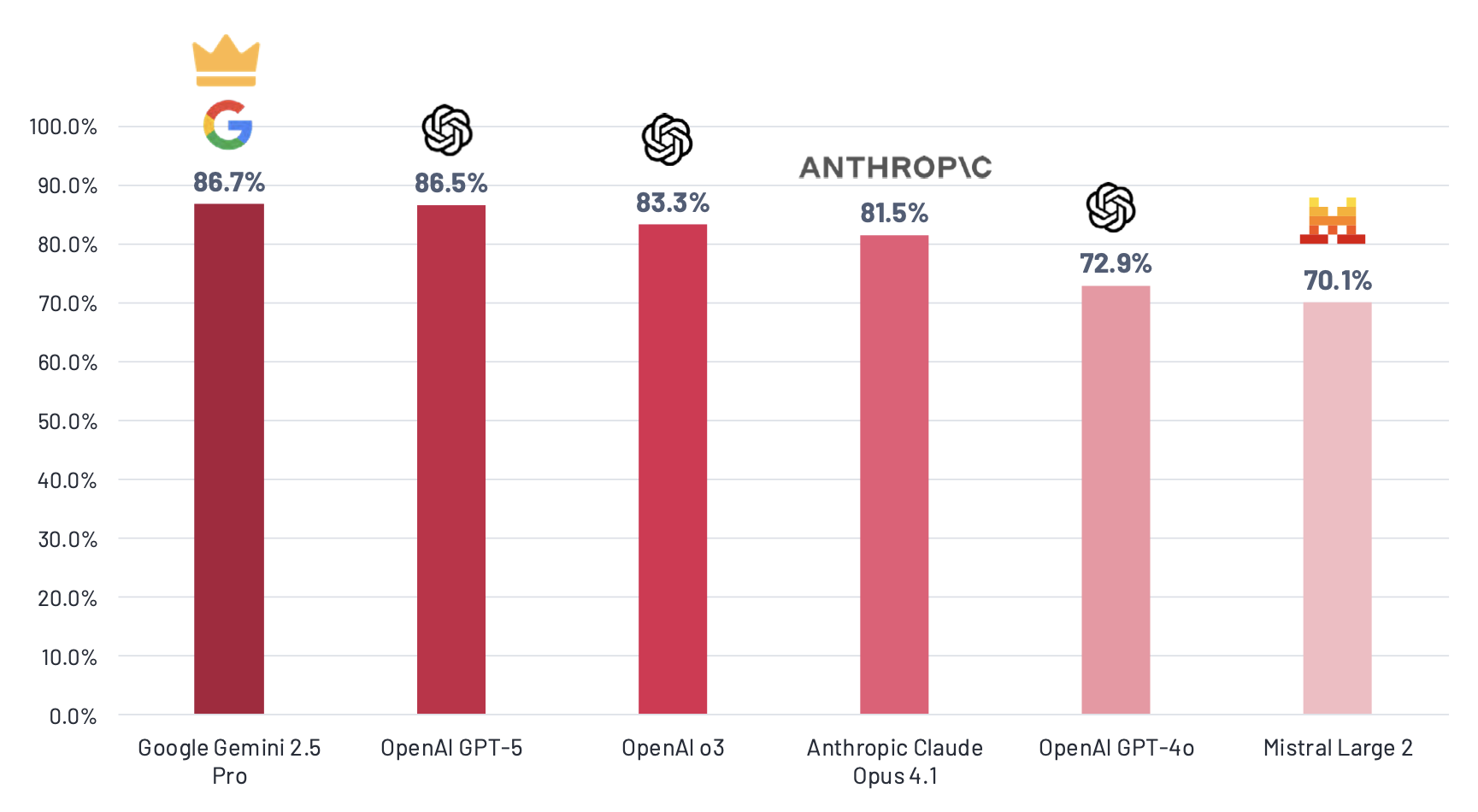

The headline, as we mentioned earlier, is that Google’s Gemini 2.5 had the best overall score, at 86.7 percent. ChatGPT 5.0, the latest model from OpenAI, was a close second, with other LLMs trailing behind. Figure 1, below, shows the overall scores for all six models tested.

Source: EQS

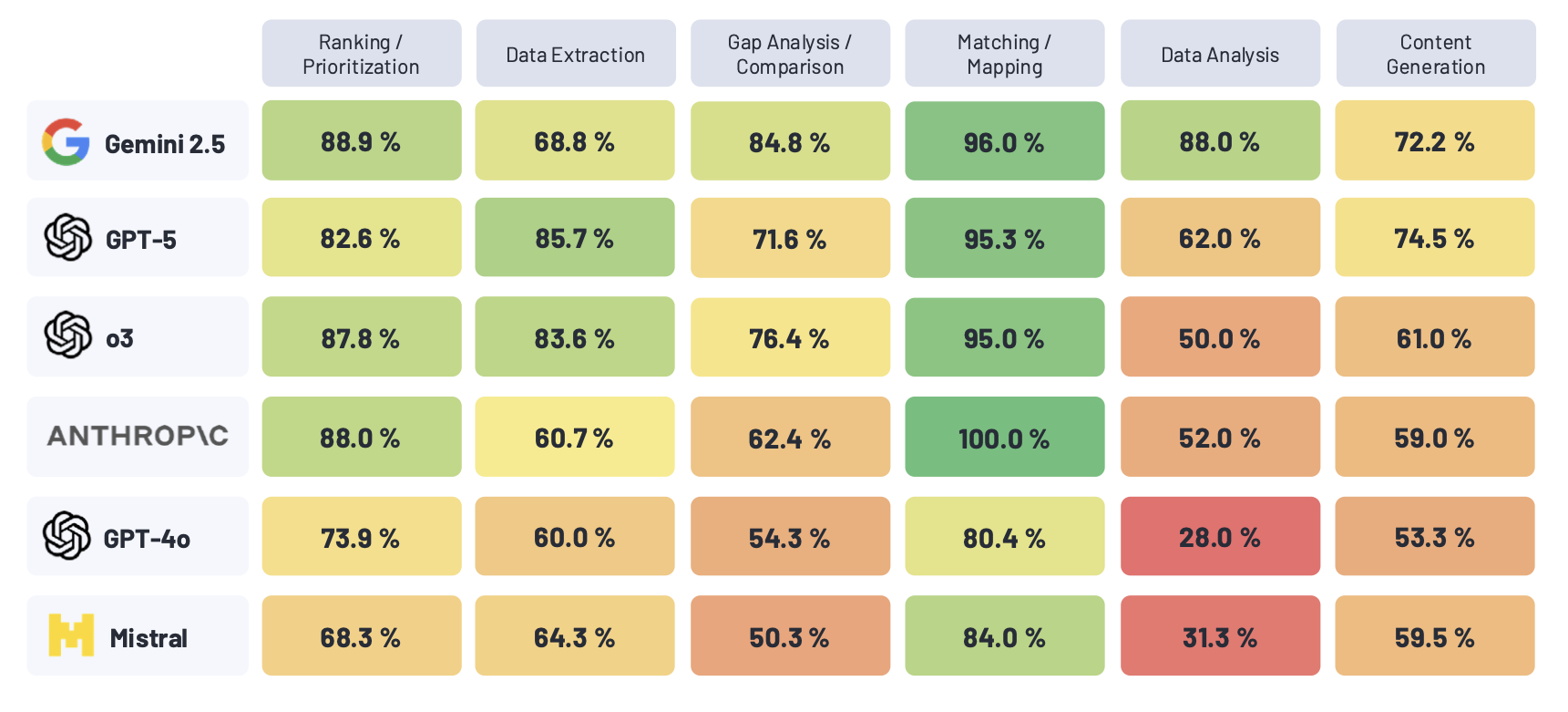

That does not mean we should all rush out to use Gemini 2.5 as the foundation for our AI-enhanced systems. EQS and BCM also studied how the six LLMs performed at various types of work, such as mapping datasets, making decisions according to sets of rules, gap analysis, and content generation. They found that some models outperformed Gemini at some tasks, but not at others.

Figure 2, below, shows how the six LLMs scored on six types of work. As you can see, Anthropic’s model scored higher than Gemini at data extraction, but scored much worse at data analysis. ChatGPT 5 scored better than Gemini at data extraction and content generation, but a smidge worse at data mapping and much worse at gap analysis.

Source: EQS

So should you use Gemini because it’s the best overall, even though it performs worse at some types of work? Or should you use a model that scores high on the types of work your compliance program encounters often, and overlook the worse performance at other types of work that your program doesn’t? Should you cobble together multiple LLMs in one IT environment, even if that means more IT governance and security challenges?
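One way to frame that choice is to weight each model's score per type of work by how often your program actually does that work. The sketch below is only an illustration: the model names, work types, scores, and weights are hypothetical placeholders, not figures from the EQS study, and a weighted average is just one of several reasonable ways to compare models.

```python
from typing import Dict, List, Tuple


def weighted_score(scores: Dict[str, float], task_mix: Dict[str, float]) -> float:
    """Average a model's per-work-type scores, weighted by how often each work type occurs."""
    total_weight = sum(task_mix.values())
    if total_weight <= 0:
        raise ValueError("task_mix must contain at least one positive weight")
    return sum(scores[work] * weight for work, weight in task_mix.items()) / total_weight


def rank_models(
    model_scores: Dict[str, Dict[str, float]], task_mix: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (model, weighted score) pairs, best first."""
    ranked = [(name, weighted_score(s, task_mix)) for name, s in model_scores.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical placeholder scores (0-100), NOT the EQS results.
model_scores = {
    "model_a": {"data_extraction": 80.0, "gap_analysis": 90.0},
    "model_b": {"data_extraction": 90.0, "gap_analysis": 70.0},
}

# Hypothetical workload: this program does three extraction tasks per gap analysis.
task_mix = {"data_extraction": 3.0, "gap_analysis": 1.0}

for name, score in rank_models(model_scores, task_mix):
    print(f"{name}: {score:.1f}")
```

With these made-up numbers, model_a has the better simple average across the two work types, yet model_b comes out ahead once the workload is weighted toward data extraction. That is the trade-off the questions above are pointing at.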

Those are just a few of the strategic questions facing compliance officers as you try to integrate AI into the programs you run. More are coming, and you’ll need to answer them somehow.

Can AI Replace Us Yet? Not Really

Aside from the overall scoring above, EQS and BCM tested the six LLMs on a wide range of specific tasks that compliance teams do on a routine basis, to see how well the LLMs could handle that work. This is important because it helps us understand how well AI could perform as an “agent” in your compliance program, doing work that historically would be done by human employees.

The short answer is that AI can handle some tasks almost flawlessly, but other tasks with much less accuracy or reliability. So stringing together a sequence of tasks into a complete process that AI can handle — well, that’s still iffy right now.

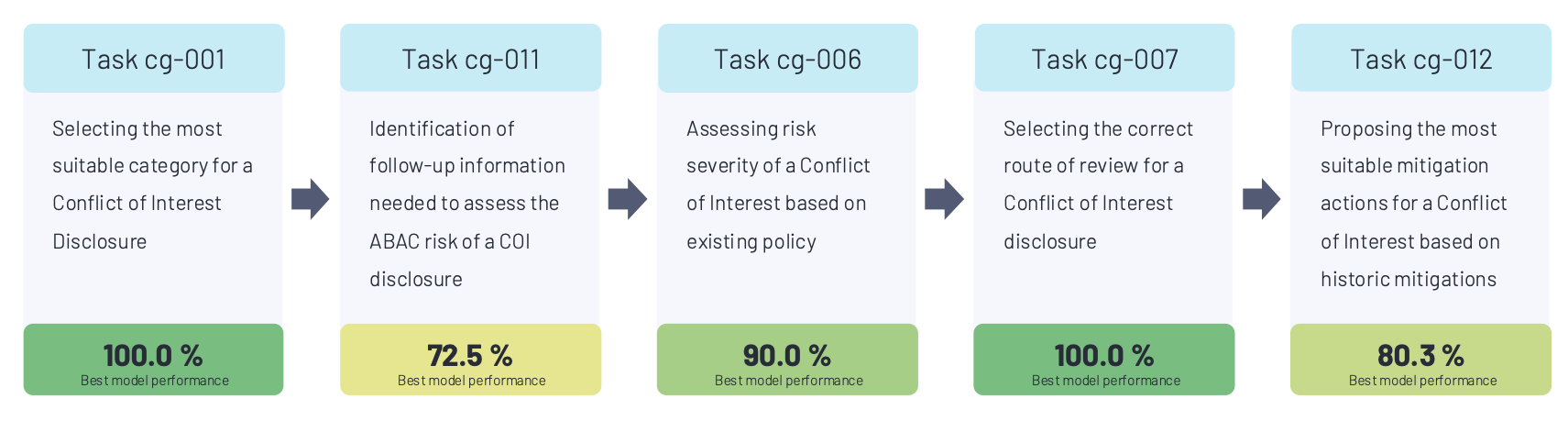

Take conflicts of interest as an example. The study broke down the process of handling a conflict of interest into five discrete tasks that happen in sequence. AI was perfect at the more regimented, rule-based tasks, such as selecting a suitable category for an employee’s conflict of interest disclosure. It was much worse at judgment-based tasks, such as identifying extra information to collect to evaluate whether a disclosure constituted a corruption risk. See Figure 3, below.

Findings like that leave compliance officers in a frustrating position. Perhaps you could use AI agents to manage your conflicts of interest program if your anti-corruption risks are generally low. Or maybe you stick with your current program for a few more years until AI improves enough to score higher on those more challenging tasks. Or maybe you can live with an AI that isn’t great at proposing mitigation steps if you have sufficient human personnel to do that work, or you audit the AI’s mitigation plans closely to confirm whether those plans make sense. Or maybe you scrap the notion of AI agents entirely, and keep using humans for the work but give them AI tools to do that work more efficiently. (Assuming you train them on how to use AI wisely, which many employees seem not to be doing right now.)

At least for the foreseeable future, integrating AI into your compliance workflow processes will require CCOs to consider trade-offs like these. AI will be naturally better at some tasks, humans better at others. The chief compliance officer’s job will be to figure out the best “configuration” of AI and human ability within each process, based on the resources you have and the risks your company faces.
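As a rough illustration of what such a configuration could look like, the sketch below assigns each task in a process to AI or to a human, depending on whether the AI's score clears a threshold you set according to your risk appetite. The task names echo the conflicts of interest example, but the scores and the threshold are hypothetical placeholders rather than results from the study, and a single cutoff is a deliberate simplification.

```python
from typing import Dict


def assign_tasks(task_scores: Dict[str, float], min_ai_score: float) -> Dict[str, str]:
    """Assign each task to 'ai' if its score meets the threshold, otherwise to 'human'."""
    return {
        task: ("ai" if score >= min_ai_score else "human")
        for task, score in task_scores.items()
    }


# Hypothetical placeholder scores (0-100) for tasks in a conflicts of interest process.
coi_task_scores = {
    "categorize_disclosure": 100.0,
    "identify_extra_information": 60.0,
    "propose_mitigation_steps": 55.0,
}

# Hypothetical threshold: a company facing higher corruption risk might set this higher.
plan = assign_tasks(coi_task_scores, min_ai_score=85.0)

for task, owner in plan.items():
    print(f"{task}: {owner}")
```

In practice the threshold would probably differ from task to task, and a "human" assignment could just as well mean a human reviewing AI output rather than doing the work from scratch.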

Third-Party AI Risk

For all this talk about LLMs and their abilities, however, we should remember that for many companies in many instances, you won’t be the one using the LLM — your technology vendor will be. Which brings us to the nettlesome issue of vendor AI risk.

For example, say you have a vendor that provides software to manage your conflicts of interest program. (EQS is one of many compliance vendors that do this.) The vendor provides the technology system to you at some fixed price, and you do the work on the vendor’s software with your data.

Then the vendor introduces AI into its product offering. That means the vendor is buying the AI brainpower of some LLM out there (probably one of the six studied above) essentially at “wholesale” price, and selling its AI-enhanced compliance service to you at a marked-up retail price.

Well, do you get to know which LLM your vendor is using? Probably, if you ask during the sales process or insist on disclosure in your contract. Are you informed any time the vendor changes its IT from one LLM to another? Possibly, if you insist on that disclosure in the contract.

Even if the vendor discloses the LLM it’s using, a more important question is how you obtain sufficient assurance that the vendor’s AI meets your security and performance expectations. Like, are you going to review whatever training protocols the vendor used on its AI systems? Are you going to review its data validation controls or its output testing results? Are you going to test the AI yourself for its vulnerability to prompt attacks?

Most compliance teams won’t have the know-how or manpower to do this. You’d need to rely on your IT or internal audit team for help, and they might not have the time or know-how to do this either. Assessing the AI risks of the vendors you use is going to be a significant challenge before you can truly embed AI into compliance processes, and given the potential risks of AI gone wrong, it is a challenge not to be trifled with.

In theory, the vendor industry could try to answer this problem by adopting something like a SOC 2 audit; those are the audits that you can ask a vendor to provide for assurance over its cybersecurity controls. What the GRC community needs is a SOC report for artificial intelligence, which would encompass cybersecurity and much more (data validation, algorithmic bias, model drift, and agentic misalignment, to name a few).

Right now we don’t have anything like that, so the burden defaults back to compliance teams and any reinforcements you can grab from your friends in internal audit or IT security. Which means AI adoption will proceed cautiously, with a fair bit of paranoia about whether you’ve identified and controlled all your AI risks effectively.