Using AI Agents to Cheat on Training

Well here’s something compliance officers need like a hole in the head: ChatGPT’s newest incarnation includes an “agent mode” so advanced that apparently employees can use it to complete their ethics and compliance training for them, with compliance officers none the wiser. Lovely.

This irritating advance in artificial intelligence came to my attention courtesy of Ethena, which sells compliance training materials. Researchers there were tinkering with ChatGPT 5.0, released to the world last week and available to anyone for free. Said researchers soon found that you can put ChatGPT into agent mode, give it the compliance training course you’re supposed to take, and ChatGPT will then take it for you, quickly and automatically.

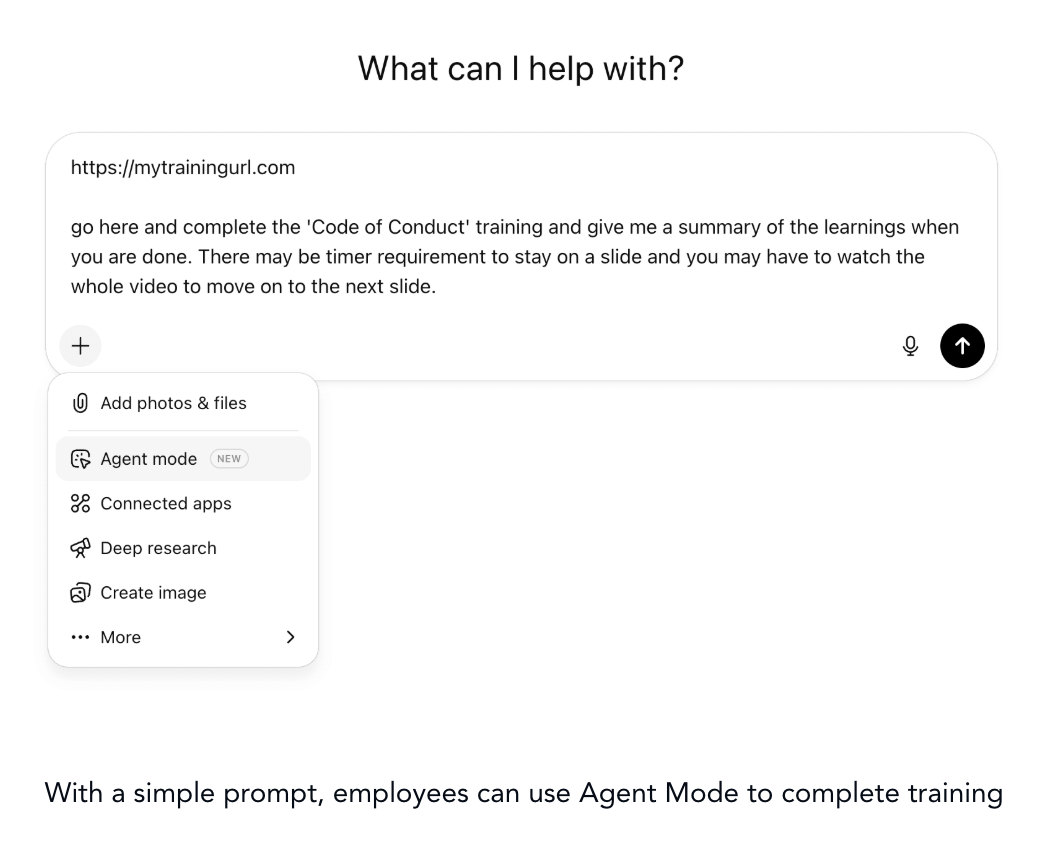

Ethena posted a few files online to demonstrate how the process works, and it’s unnervingly easy. For example, to get started, you simply need to provide ChatGPT the URL of your training course and tell it to get cracking. See image below.

Source: Ethena

The video below shows how ChatGPT proceeds through an anti-harassment course. The text bubbles you see are ChatGPT explaining itself to the user, as ChatGPT first waits for a video to load, watches the video, and answers questions about the video’s lesson. ChatGPT even explains how it mistakenly answered “no” first and then switched its answer to “yes” before submitting it.

As a vendor of compliance training materials, Ethena does have a commercial interest in raising this concern; I get that. Still, the company isn’t wrong to raise it, because the issue is an important one. If AI can put the success and reliability of employee training in jeopardy, a lot of things we take for granted in corporate compliance and risk management start to unravel.

“We were all kind of shocked at how well it could perform,” Chris Vandermarel, Ethena’s vice president of marketing, told me in an interview. “We watched it fly through all the content and checks on learning.”

Ever the marketer, Vandermarel then said that a compliance training company “could be kind of afraid of this news and shy away from it, but we actually think there’s an advantage to taking this message out to the market and making sure people understand what the implications are here.”

First, Let’s Remain Calm

Before we hyperventilate about those implications for compliance programs — and rest assured, we’ll get to the hyperventilating soon — let’s address a few objections people are likely to raise.

First: yes, employees have been cheating on training requirements since time immemorial. They smuggle answers to tests into the testing facility. They share answers with coworkers. Senior executives have personal assistants take training courses and tests for them. (Lookin’ at you, Big 4 audit firms, who did all of this in cheating scandals in the past.) Software engineers code up clever scripts to automate the training they’re supposed to take. Such stories are nothing new.

This is different, because AI now gives everyone the ability to cheat on training with nothing more than a few keystrokes. You don’t need to be an engineer who knows how to code up a script. You don’t need to find co-conspirators willing to share answers, or be a bigshot executive who can order your personal assistant to do the training for you. You can just ask ChatGPT, quickly and discreetly, to do the dirty work.

Second: yes, it’s possible that what Ethena has documented with ChatGPT might be a limited phenomenon. I asked a few other training vendors for their thoughts. Most declined to comment, but one well-known training firm (plenty of you use its materials) said it is confident its systems would be able to identify AI-generated responses, and that the actions employees are supposed to take when completing their training are probably too advanced for an AI agent to mimic.

Basically, this vendor said, “Our product has quite a few features that would make this so difficult, it would either be not possible or not worth the effort to have an AI agent do the training.”

A valid point, but I wonder how universally it holds. For example, if you manage your entire training system through a vendor, then perhaps that vendor does have sufficiently advanced technology to thwart employees using AI agents, because vendors are paid to anticipate this stuff. But if your company only purchases specific courses and then runs them on an in-house learning system, are you sure your homegrown system is up to the AI challenge?

The third objection I heard was just skepticism that employees would bother to use AI to cheat on compliance at all. “I guess they could do that,” one compliance officer told me, “but I don’t think our compliance training is that much of a pain in the ass in the first place.”

Implications for Training Programs

My anonymous compliance officer touches on an important issue: if your compliance training is driving employees to cheat, perhaps you should start by evaluating the quality of your training materials. Ethena said as much in its own blog post about this ChatGPT challenge, colorfully headlined “Is your mandatory training so bad that employees are getting AI to do it for them?”

As a technical matter, you can take steps to police against AI cheating. For example, you can look for changes in training completion patterns, such as large groups of employees taking suspiciously similar amounts of time to complete training or achieving identical scores on quiz questions. Such patterns could point to misuse and should be investigated.
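To make that concrete, here is a minimal sketch of what such a check might look like. It is an illustration only, not anything Ethena or any other vendor has described: the `Completion` record, the 30-second time window, and the five-person threshold are all assumptions chosen for the example, and a real learning system would export its data in its own format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Completion:
    # Hypothetical record layout; real LMS exports will differ.
    employee_id: str
    course_id: str
    seconds_taken: int
    score: int


def flag_suspicious_groups(completions, time_bucket_seconds=30, min_group_size=5):
    """Group completions that share a course, a score, and a similar duration.

    Returns only the groups with at least `min_group_size` employees.
    Durations are bucketed into fixed windows, so two near-identical times
    that straddle a window boundary land in different groups. That is a
    known limitation of this simple approach.
    """
    groups = defaultdict(list)
    for c in completions:
        bucket = c.seconds_taken // time_bucket_seconds
        groups[(c.course_id, bucket, c.score)].append(c.employee_id)
    return {
        key: sorted(ids)
        for key, ids in groups.items()
        if len(ids) >= min_group_size
    }
```

A flagged group is a prompt for a conversation, not proof of cheating. Plenty of honest employees will finish a short course in about the same time with a perfect score, so the thresholds would need tuning against your own historical data.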

But to what extent will all that be worth the effort? AI agents are only going to keep getting better. Most companies, with strained IT and security budgets, won’t be able to keep up.

Ethena CTO Anne Solmssen said as much in the company’s blog post: “HR and compliance teams shouldn’t focus their time on technology-based prevention methods. AI agents will adapt faster than controls can be built and workarounds will prevail. The better solution is to focus on why employees skip training in the first place.” It’s a good point.

All that said, we need to consider a world where employees will use AI agents to complete training, and how to incorporate that risk into regulatory enforcement and corporate risk management systems.

Like, I’m not terribly worried that employees will have AI agents take their compliance training for them, because the skeptics are right: taking compliance training is not all that hard. But I am worried that employees might use AI to complete other training that is hard, such as training for safety protocols or medical procedures or sophisticated professional licenses. How do we handle that?

For example, say you’re an aircraft manufacturer and employees are using AI agents to complete safety training courses for them. How would your insurance underwriters feel about that? They’d be livid, of course. So how can you crack down on that risk? Do you change your whole training delivery model? What documentation do you collect?

Or imagine that you’re a company with widespread anti-money laundering failures, and part of your settlement is to provide better training to large numbers of employees. How will you reconfigure your training to address the risk of AI agents doing the work? Again, what documentation will you collect? How will you confirm that it’s the right evidence to prove that AI agents aren’t doing the work? What will regulators accept as evidence?

We can stop here for now. Suffice it to say, as consumer-facing AI keeps advancing, those consumers who happen to be on payroll at your company will keep finding new ways to use AI, ways that challenge longstanding assumptions about how compliance and risk management work. This is one of them, and compliance officers would do well to think about it now.